Feedback Collection Tools Compared: Forms vs. Video vs. Async (2026)

Comparing feedback collection tools by method—forms, video, and async options. Find the right fit for client reviews, user research, and team input.

Jon Sorrentino

Talki Co-Founder

Not all feedback is created equal. A customer satisfaction survey needs different tooling than design revision feedback, which needs different tooling than user research interviews. The feedback collection tools you choose should match the type of input you're trying to capture—and the people you're asking to provide it.

Over years of design leadership at PepsiCo, Barstool Sports, and VICE, I've reviewed thousands of pieces of client feedback—and watched countless projects derail because we chose the wrong collection method. The right tool changes everything about how clearly clients can communicate and how quickly you can move forward.

This guide compares the three main categories of feedback collection tools: form-based, video-based, and async annotation tools. If you're looking for methods beyond traditional surveys, we've covered seven alternative approaches that work better for different scenarios.

Quick Picks: Best Feedback Collection Tools by Use Case

Best for surveys at scale: Typeform, SurveyMonkey, Google Forms

Best for video feedback collection: Talki

Best for design annotation: Markup.io, Figma, Pastel

Best for client design reviews: Talki (video) or Markup.io (annotation)

Best for user research: Talki (async video), dedicated UX research platforms (moderated)

Best for website QA: Markup.io, Pastel

Form-Based Customer Feedback Collection Tools

Form tools collect structured responses through surveys, questionnaires, and input fields. They're the oldest category of customer feedback collection tools and remain the best choice when you need quantifiable data at scale.

When forms work well

Form-based feedback excels when you know exactly what questions to ask and want responses you can aggregate. Customer satisfaction scores (CSAT), Net Promoter Score (NPS), and multiple-choice preference surveys all benefit from form structure.

Strengths:

Scalable to thousands of respondents

Easy to analyze and visualize data

Benchmarkable over time

Low friction for quick responses

Anonymous options available

Representative tools: Typeform, Google Forms, SurveyMonkey, Jotform

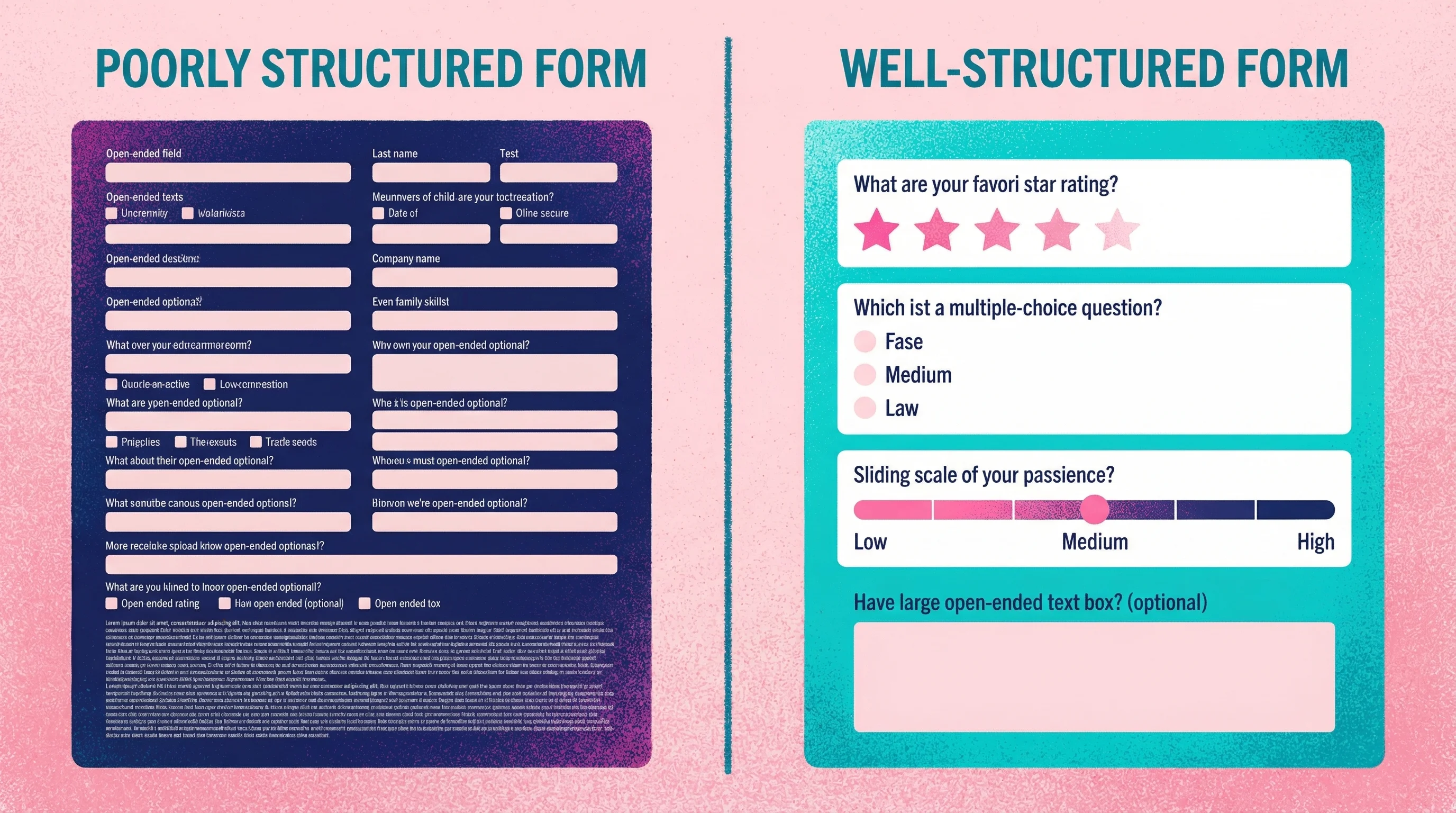

When forms fail

Forms fall apart when the feedback you need is nuanced, visual, or difficult to anticipate. Asking "what do you think of this design?" via a text field produces vague responses because the format forces complex reactions into unstructured text boxes.

I know plenty of designers and agencies that rely on forms to collect feedback. While it can be a reliable process, it's very outdated, and nobody gets excited about filling out a form. It's boring work for your clients.

The bigger problem is what happens without guardrails. I've seen forms become bloated with tons of different feedback fields. Even worse, when a client goes rogue and sends an email instead of using your form, you get a wall of text that goes in circles. I once received feedback where the client kept writing and writing, essentially saying the same thing in different ways. When we finally got on a call, it turned out they actually liked the logo we were working on. One shade of blue was throwing them off, and they wanted the typography handled differently. Because they were writing the feedback instead of talking through it, they got lost in their own thoughts, and the result was a meandering wall of text that took me an hour to decode.

This is exactly why written design feedback often fails—clients struggle to articulate visual reactions through text alone.

Limitations:

Can't capture visual context

Leading questions skew results

Open-ended responses are hard to parse at scale

Misses the "why" behind quantitative answers

Response fatigue reduces completion rates on long surveys

The survey length problem

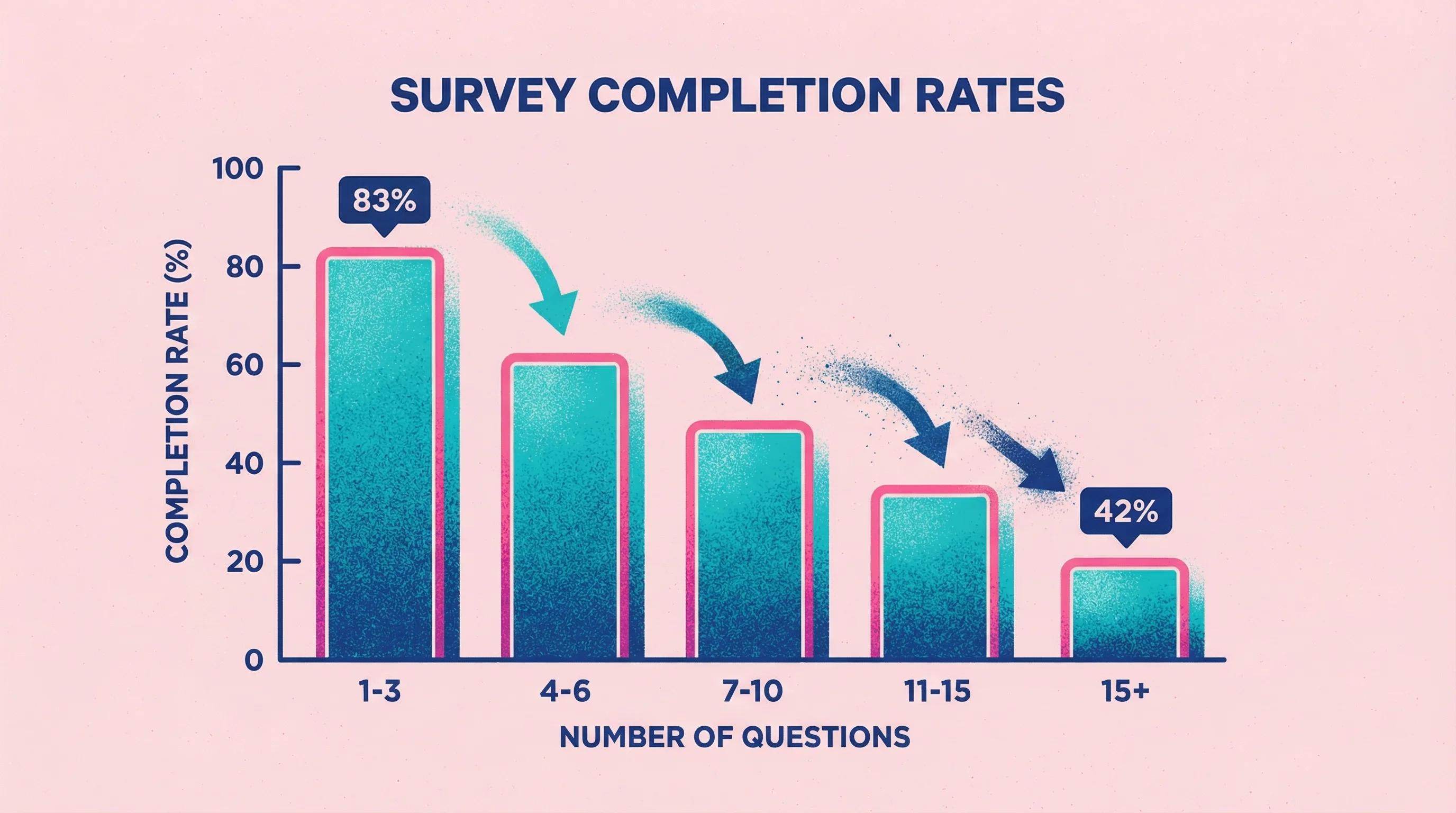

Survey fatigue is real and measurable. Research analyzing over 267,000 survey responses found that completion rates drop from 83% for 1-3 question surveys down to just 42% when you ask 15+ questions. Every additional question is another opportunity for respondents to abandon the survey.

The problem is even worse on mobile. Studies show that surveys longer than 12 minutes on desktop or 9 minutes on mobile experience drastic drop-off rates. For product feedback tools collecting input from users on the go, this means keeping surveys brutally focused on what you actually need to know.
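To make the cost of a long survey concrete, here's a rough planning sketch in Python. It uses the two completion rates quoted above (83% and 42%) and assumes they carry over to your audience, which they may not. The function names and the 500/200 figures are made up for illustration.

```python
import math

# Completion rates quoted in this section. Whether they transfer to your
# audience is an assumption, so treat the outputs as rough planning numbers.
COMPLETION_RATES = {
    "1-3 questions": 0.83,
    "15+ questions": 0.42,
}


def expected_completions(starts: int, rate: float) -> int:
    """Estimate how many people who start a survey will finish it."""
    return round(starts * rate)


def starts_needed(target_completions: int, rate: float) -> int:
    """Estimate how many people must start the survey to hit a target."""
    return math.ceil(target_completions / rate)


# Hypothetical scenario: 500 people start, or you need 200 finished responses.
for label, rate in COMPLETION_RATES.items():
    print(label, expected_completions(500, rate), starts_needed(200, rate))
```

Under those assumptions, 500 people starting a short survey yields about 415 completed responses, while the same 500 starting a 15+ question survey yields about 210. Put the other way, collecting 200 finished responses takes roughly 241 starts for the short survey and 477 for the long one.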

Best practices for form-based feedback

Keep surveys short. For most use cases, 5-10 questions is the maximum before abandonment spikes. If you need more comprehensive feedback, consider breaking it into multiple shorter surveys or mixing in other methods.

Mix quantitative and qualitative strategically. Lead with rating scales (easy for respondents), then follow with optional open-ended questions for those who want to elaborate.

Understanding NPS and CSAT scores:

Net Promoter Score and Customer Satisfaction metrics vary widely by industry, so context matters. Recent benchmark data shows average NPS ranges from around 16 in some sectors up to 80 in others (insurance leads at ~80, while tech and financial services typically sit in the 60s). For CSAT, anything above 70% is generally considered good, with most industries clustering in the 65-80% range.

While high scores often correlate with better business performance—Bain & Company research found that NPS leaders grew roughly 2x faster than competitors—these metrics should be interpreted carefully. They're useful benchmarks but work best when combined with deeper qualitative feedback rather than treated as standalone measures of success.
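If you've never calculated these scores by hand, here's a minimal sketch using the standard definitions. NPS is the percentage of promoters (9-10 on a 0-10 scale) minus the percentage of detractors (0-6). CSAT is commonly reported as the share of respondents who pick 4 or 5 on a 5-point scale, though some teams use a different cutoff. The sample ratings below are invented for illustration.

```python
def nps(scores):
    """Net Promoter Score from 0-10 ratings: % promoters minus % detractors."""
    if not scores:
        raise ValueError("need at least one score")
    promoters = sum(1 for s in scores if s >= 9)    # ratings of 9 or 10
    detractors = sum(1 for s in scores if s <= 6)   # ratings of 0 through 6
    return 100 * (promoters - detractors) / len(scores)


def csat(ratings, satisfied_from=4):
    """CSAT as the percentage of 1-5 ratings at or above `satisfied_from`."""
    if not ratings:
        raise ValueError("need at least one rating")
    satisfied = sum(1 for r in ratings if r >= satisfied_from)
    return 100 * satisfied / len(ratings)


# Hypothetical sample data, for illustration only.
sample_nps = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
sample_csat = [5, 4, 4, 3, 5, 2, 4, 5]

print(nps(sample_nps))    # 20.0  (5 promoters, 3 detractors, 10 responses)
print(csat(sample_csat))  # 75.0  (6 of 8 ratings are 4 or 5)
```

Note that NPS can run from -100 to 100, while CSAT is a plain percentage, so the two numbers aren't directly comparable.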

Video-Based User Feedback Collection Tools

Video feedback captures reviewers talking through their thoughts while showing their screen or pointing at specific elements. It's the closest digital approximation to an in-person review session.

When video works well

Video feedback shines when context and nuance matter more than scale. Design reviews, client revisions, and user research all benefit from seeing exactly what someone is looking at while they explain their thinking.

Strengths:

Captures tone, emphasis, and emotion

Shows exactly what reviewers are referencing

Reveals hesitations and uncertainties text hides

Natural for reviewers (talking is easier than writing for most people)

Creates a record that's easy to revisit

Representative tools: Talki (for collecting video feedback), Loom (for sending video messages), VideoAsk (for interactive video surveys)

If you're specifically looking for Loom alternatives for feedback collection, we've compared 15 options that handle the collection workflow better.

Why video communicates more than text

The difference between video and text feedback isn't just preference—it's measurable. Research comparing feedback modes found that video feedback significantly improved perceived quality, particularly on understandability and encouragement. Participants receiving video responses considered them more personal and easier to grasp because non-verbal cues (facial expressions, gestures, vocal tone) provide context that text alone can't convey.

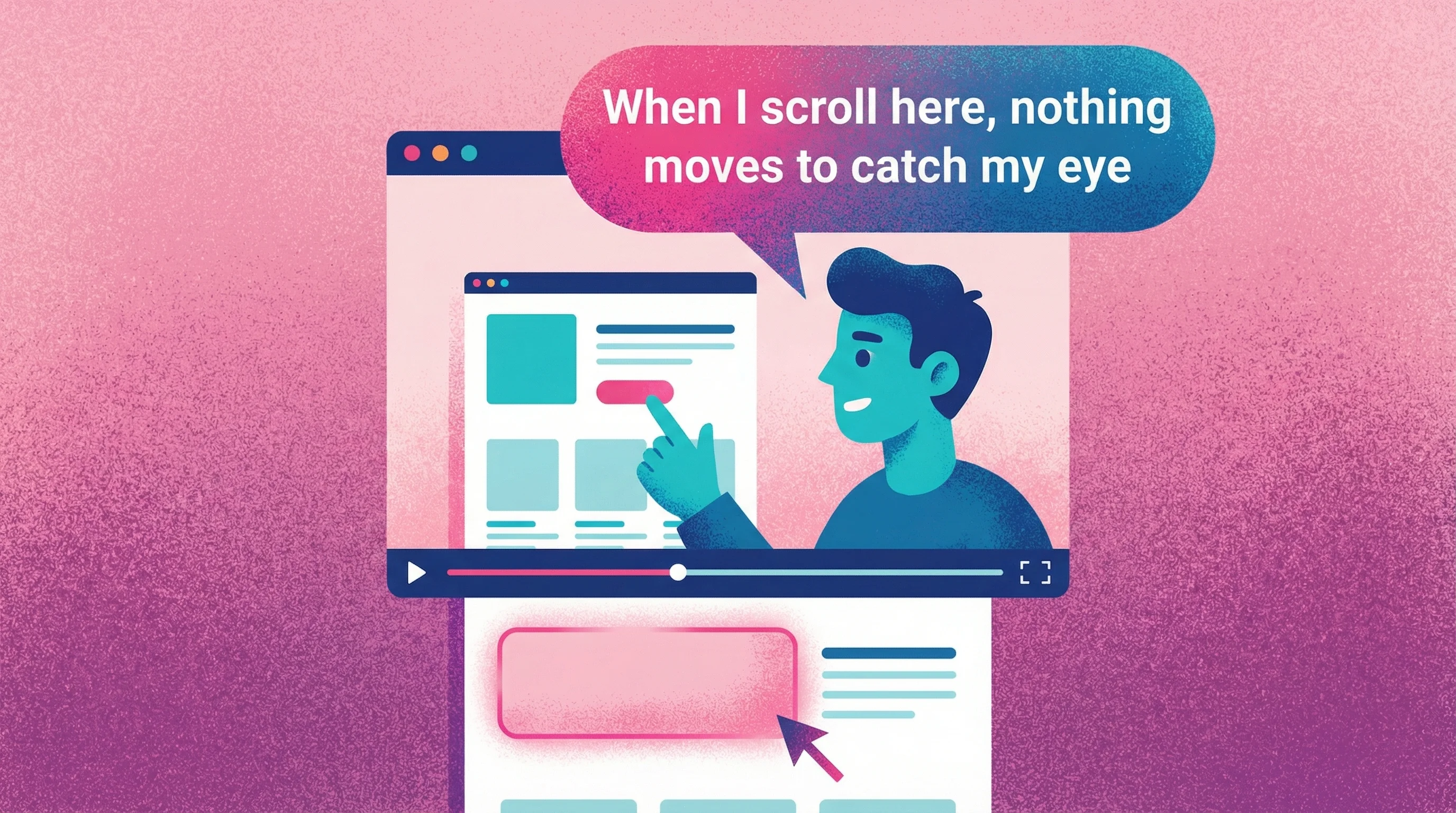

This gap is exactly why I built Talki. After years of trying to decode written feedback like "this doesn't feel premium enough" or "can we make it more dynamic?", I realized clients weren't being vague intentionally—they just couldn't articulate visual reactions in writing. When I started asking them to record quick videos instead, suddenly "more dynamic" became "when I scroll, nothing moves to catch my eye" with them literally pointing at the static hero section. The clarity was instant.

Studies also show that people tend to give lengthier, more detailed input via video. Video feedback comments are typically longer and more supportive, while text feedback tends to be shorter and focused on specific critiques. This makes video particularly valuable for client feedback tools where understanding the "why" behind reactions matters as much as the "what."

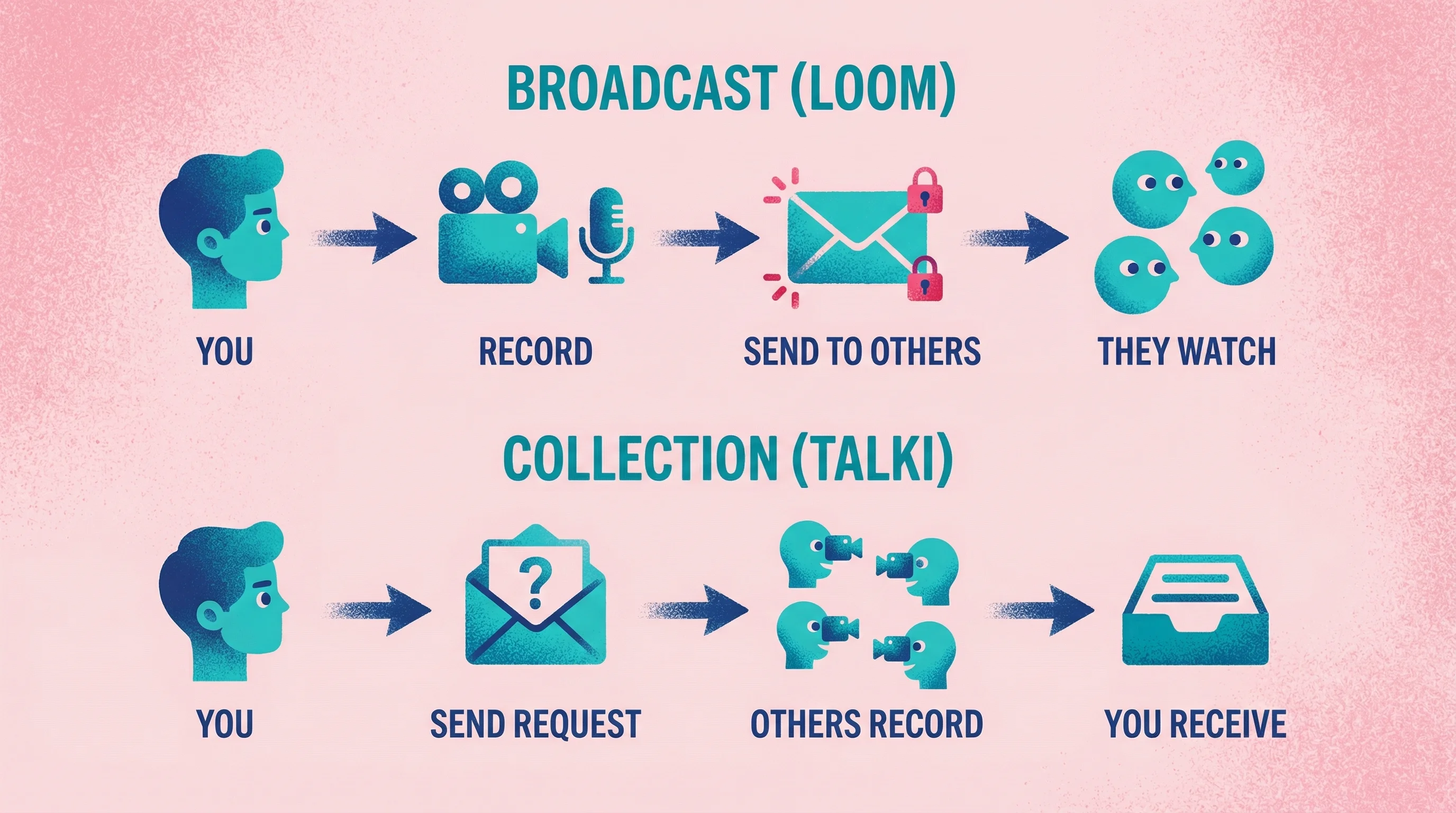

The collection vs. broadcast distinction

Most video tools are designed for broadcasting—you record and send videos to others. But feedback collection requires the opposite flow: others need to easily record and send videos to you.

This asymmetry matters. Loom is excellent for explaining your work to clients, but asking clients to record Loom responses means asking them to create accounts and learn a new tool. The friction kills response rates.

Talki addresses this specifically: Recipients click a link, record their feedback, and you receive the video—no accounts or setup required on their end. The AI transcription means you can skim written summaries when you need quick context, then watch full videos when nuance matters.

When video feedback fails

Video doesn't scale efficiently. Watching 50 video responses takes far longer than scanning 50 form submissions. For quantitative research or high-volume user feedback tools, video adds overhead that isn't justified.

Unlike survey data that can be aggregated quickly, each video must be watched—often multiple times—to extract insights. Analyzing large volumes of video responses is labor-intensive, requiring significant time investment that text-based feedback doesn't demand. Organizations using video at scale need to budget for analyst hours or AI tools to manage the review process.

Video also requires more from respondents, even with low-friction tools. Recording video demands finding a quiet space, using a camera, and being comfortable on video—factors that can deter some people from participating. Someone can complete a five-question form in 30 seconds; recording a video takes at least a minute or two. For quick pulse checks, that's excessive.

Limitations:

Time-intensive to review at scale

Requires more effort from respondents

Harder to aggregate and compare responses

Storage and organization can get unwieldy

Some respondents are camera-shy

Yields unstructured data that resists quantitative analysis

Async Annotation and Comment Tools

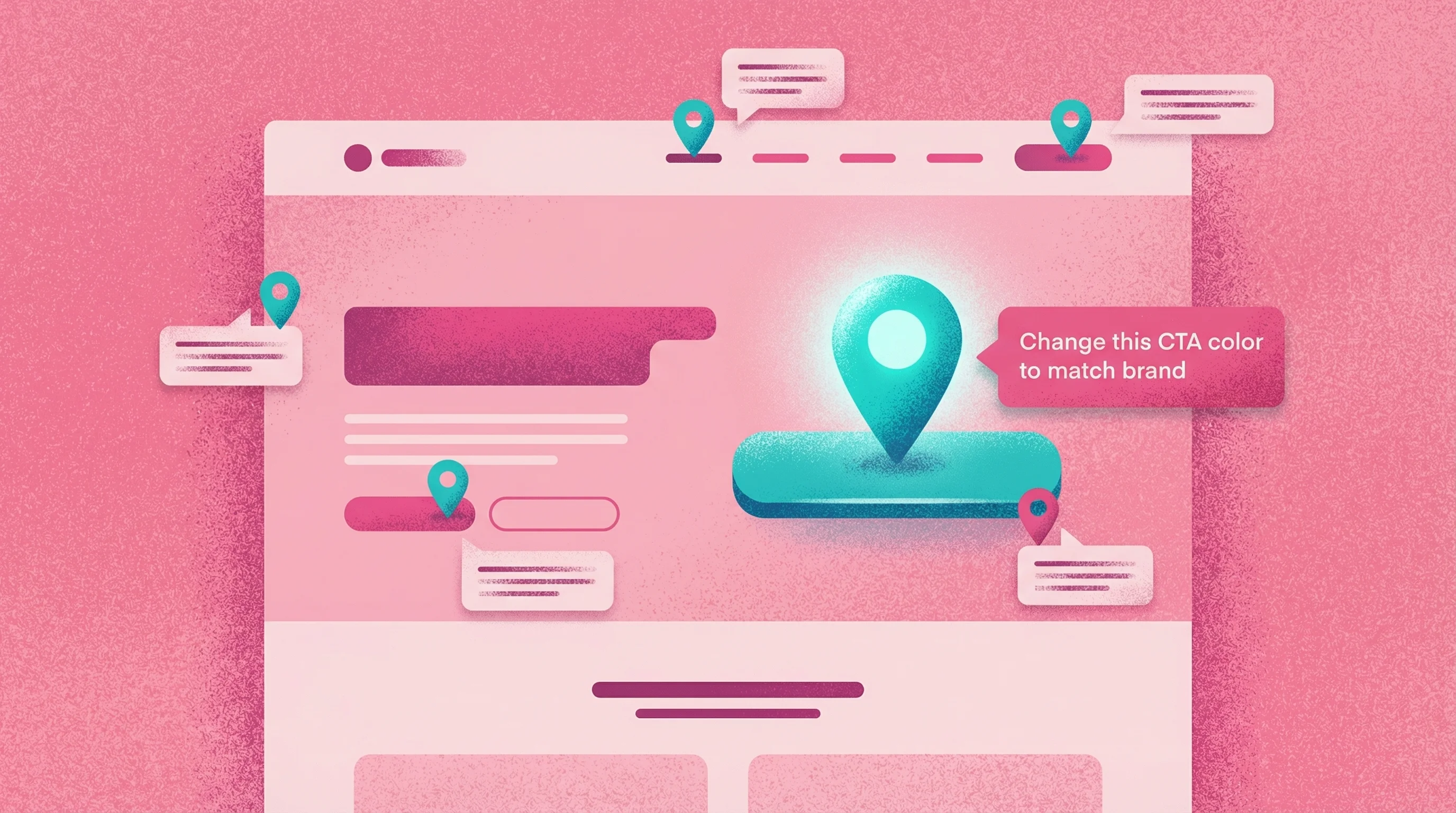

Annotation tools let reviewers leave comments pinned to specific locations on designs, websites, documents, or other visual content. They solve the "where exactly?" problem that plagues written feedback.

When annotation works well

Annotation tools excel for design feedback on static visuals or live websites. When you need to know precisely which element someone is referencing, the ability to click and comment beats paragraph descriptions.

Strengths:

Precise location of feedback

Context stays attached to the element

Easy to track resolution of specific issues

Works well for detailed, technical reviews

Supports collaboration among multiple reviewers

Representative tools: Markup.io, Pastel, Figma comments, InVision, Filestage

For design-specific projects, we've compiled the best design feedback tools that combine annotation with other collaboration features.

The speed advantage of visual feedback

Annotation tools dramatically accelerate feedback cycles by eliminating ambiguity. Research on bug tracking shows that bugs reported with annotated screenshots or screen recordings get fixed about 25% faster than text-only bug reports. The visual context helps developers immediately see the issue without back-and-forth clarification.

Teams using integrated annotation workflows report resolving issues almost twice as fast as those relying on written descriptions alone. Instead of leaving the team to sort through "20 emails with conflicting feedback," centralized annotated reviews show exactly what each stakeholder is referencing. This reduces misinterpretation and prevents errors caused by working on outdated information.

When annotation fails

Annotation solves the location problem but not the description problem. Reviewers can show you exactly where they have feedback, but they still need to articulate what's wrong in writing. "This feels off" pinned to a specific button is more useful than "this feels off" in an email, but it still leaves you guessing.

Limitations:

Still relies on written articulation

Can feel cluttered on complex designs

Less effective for motion or interactive content

Some tools require accounts or setup

Doesn't capture tone or nuance

Side-by-Side Comparison

| Factor | Forms | Video | Annotation |
|---|---|---|---|
| Best for | Quantitative data, surveys, large audiences | Nuanced feedback, client reviews, user research | Design reviews, website feedback, document editing |
| Captures visual context | No | Yes (screen recording) | Yes (pinned locations) |
| Captures tone/emotion | No | Yes | No |
| Scalability | Excellent | Poor | Moderate |
| Respondent effort | Low | Moderate | Low-Moderate |
| Analysis effort | Low (quantitative) to High (open-ended) | High | Moderate |
| Friction for respondents | Very low | Low-Moderate | Low-Moderate |

Tool recommendations by use case

Client design reviews: Video (Talki) or annotation (Markup.io, Figma)

Video captures the "why" behind feedback; annotation works when issues are specific and locatable. For clients who consistently struggle to articulate feedback, video usually produces better results. Learn more about which video feedback tools actually get used in our head-to-head comparison.

Customer satisfaction: Forms (Typeform, SurveyMonkey)

Quantitative NPS or CSAT scores need the structure and scalability of form tools. Add optional open-ended questions for qualitative color.

User research: Video (Talki for async, dedicated UX research tools for moderated)

Watching users interact with your product while explaining their thought process reveals insights surveys can't capture.

Website QA and bug reports: Annotation (Markup.io, Pastel)

Testers need to show exactly where bugs occur. Pinned comments with screenshots are more actionable than text descriptions.

Team feedback on documents: Annotation (Google Docs comments, Filestage)

Inline comments keep feedback attached to specific passages, making review and resolution straightforward.

Choosing the Right Tool for Your Use Case

Start with what you're trying to learn

Before evaluating tools, clarify the feedback you need. Are you measuring satisfaction (forms)? Understanding detailed reactions (video)? Identifying specific issues with specific elements (annotation)?

Consider your respondents

Who's giving feedback changes which tool works best. Internal team members can handle more complex tools; external clients need zero friction. Technical users might prefer precise annotation; non-designers often communicate better through video.

The friction factor

Requiring users to create accounts or navigate multi-step processes dramatically lowers participation rates. Research confirms that surveys which are easy to access—no login required—see significantly higher response rates. For external stakeholders like clients reviewing designs, an account barrier can be fatal.

I learned this lesson the hard way. On one project, I sent a Loom link to 3 stakeholders asking for feedback on design concepts. Only 1 actually had Loom already and sent a video response. The other two? Radio silence. When I followed up, they admitted they didn't want to create yet another account just to leave feedback. The tool became the obstacle instead of the solution.

Every additional step in a feedback process is a potential exit point. If users must install a browser extension, grant permissions, or fill out lengthy onboarding before they can comment, many will quit before finishing. Web surveys shown to already-logged-in users can achieve response rates of 60-70%, versus much lower rates for surveys requiring separate login or sign-up.

The best client feedback software eliminates these barriers entirely. Embedded feedback widgets, guest responses, and simple email links to quick forms get far more uptake than asking people to sign up for and learn a new tool.

Factor in volume

How much feedback will you collect? High-volume scenarios favor forms for efficient aggregation. Low-volume, high-stakes feedback (like client revisions) justifies video's higher review time.
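The goal and volume guidance above can be sketched as a simple rule of thumb. This is one possible reading of the advice, not a definitive decision procedure: the `Goal` names, the `suggest_method` function, and the 100-response threshold are all hypothetical, and the fallback to forms at high volume reflects the review-time tradeoff described earlier.

```python
from enum import Enum


class Goal(Enum):
    MEASURE_SATISFACTION = "measure satisfaction"
    UNDERSTAND_REACTIONS = "understand detailed reactions"
    LOCATE_ISSUES = "identify issues on specific elements"


def suggest_method(goal: Goal, expected_responses: int,
                   high_volume_threshold: int = 100) -> str:
    """Suggest a starting method from the feedback goal and expected volume.

    The threshold is an illustrative assumption; tune it to how much
    video review time your team can realistically absorb.
    """
    if goal is Goal.MEASURE_SATISFACTION:
        return "forms"
    if goal is Goal.LOCATE_ISSUES:
        return "annotation"
    # Detailed reactions: video when volume is low enough to watch everything,
    # otherwise forms with open-ended questions so responses can be aggregated.
    if expected_responses >= high_volume_threshold:
        return "forms"
    return "video"


print(suggest_method(Goal.UNDERSTAND_REACTIONS, expected_responses=5))
print(suggest_method(Goal.MEASURE_SATISFACTION, expected_responses=5000))
```

For a handful of client stakeholders reacting to concepts, this suggests video. For a satisfaction survey sent to thousands of customers, it suggests forms.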

Account for your workflow

Where does feedback fit in your process? Tools that integrate with your project management stack reduce friction. Standalone tools with export options work when you need flexibility.

The hybrid approach

Many teams use multiple feedback methods depending on the stage and type of input needed. Combining feedback modes—surveys plus video interviews plus observational studies—produces more reliable and well-rounded insights than any single method alone.

UX researchers call this triangulation: using multiple data sources to enhance the credibility of a study. The core idea is that every method has limitations—surveys might lack depth, interviews lack scale, analytics lack context—but using them together lets one method's strength compensate for another's weakness.

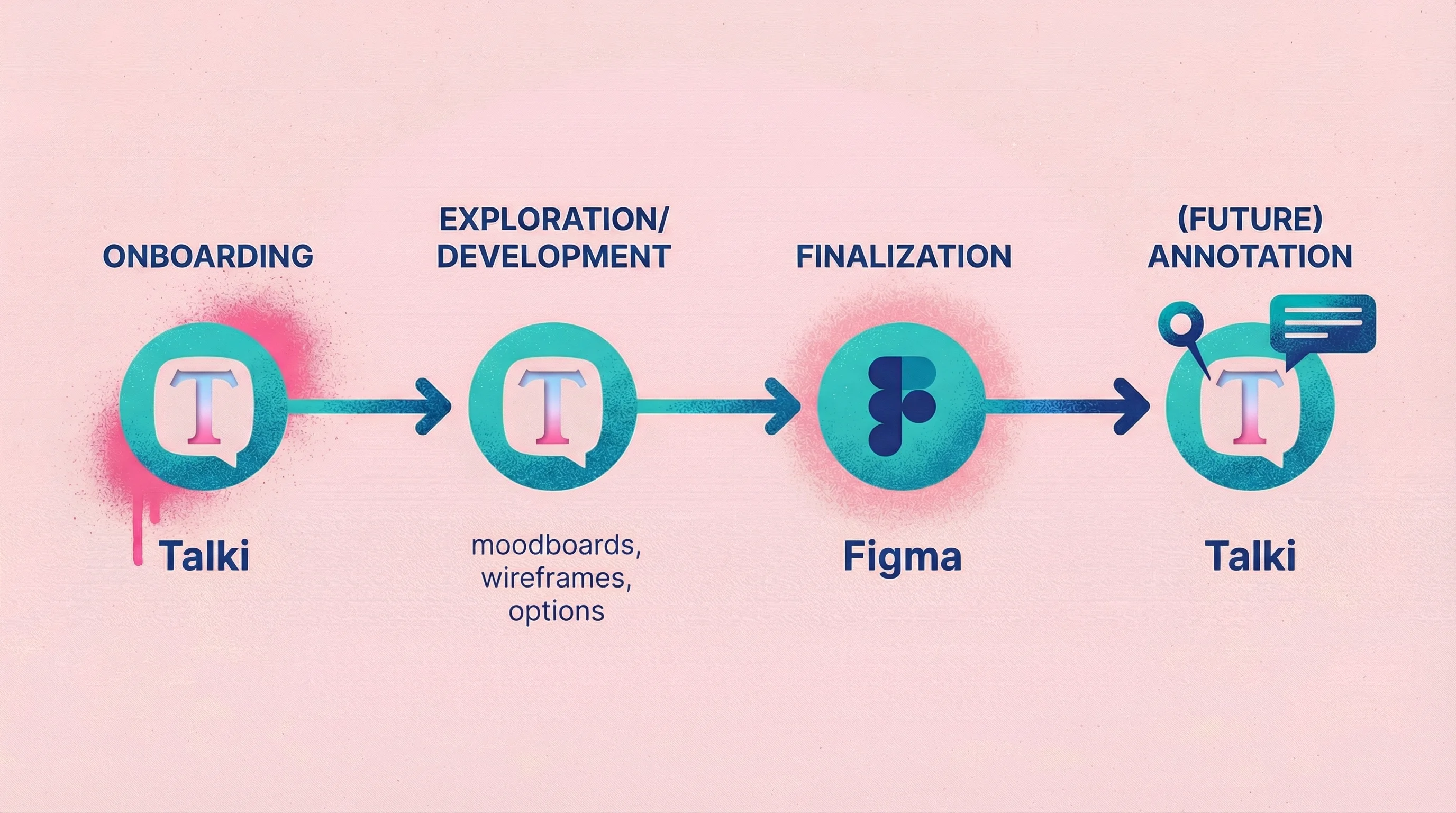

In my own projects, I've evolved to a stage-based approach. I use Talki for client onboarding—this allows me to warm up the client and not worry about staying within the questions of a form. It lets them speak freely and openly, and sometimes get weird. The good type of weird that gives a designer like me ammunition to really push the project to a new place.

I continue using Talki throughout the project when we're in the development phase—think moodboards, wireframes, options. Anywhere we're exploring and I need to understand reactions and thought processes, not just spot specific issues. Then when it comes down to finalizing small details in development or final phases, I switch to Figma comments for precise, technical feedback on specific elements.

This will soon change once we build out annotation tools within Talki so I can keep my feedback collection in one place, but the principle remains: match the tool to the stage and type of feedback you need.

Example workflow for a design agency:

Initial research: Video feedback from stakeholders about brand preferences

Concept feedback: Annotation on design comps

Revision rounds: Video collection for nuanced client reactions

Final approval: Simple form confirmation

This mixed approach not only increases confidence in findings but often uncovers insights that would be missed by a single method. When different methods converge on the same conclusion, stakeholders trust the results more, easing buy-in for product decisions.

FAQ

What's the best feedback collection tool for small teams?

For versatility on a budget, combine a free form tool (Google Forms or Typeform's free tier) with a video feedback tool that has a free option, like Talki. This covers both quantitative surveys and nuanced feedback without significant cost.

How do I get more people to complete feedback requests?

Reduce friction. Every click, field, and required account reduces completion rates. For video, use tools like Talki that don't require respondent accounts. For forms, keep surveys under 5 minutes. For annotation, send direct links that don't require logins.

Can I use video feedback for user research?

Yes—async video feedback works well for remote user research where synchronous sessions aren't practical. Ask users to record themselves completing tasks while thinking aloud. Tools like Talki make it easy to collect these recordings without scheduling live sessions.

How do I organize feedback from multiple sources?

Most teams find that consolidation matters more than tool choice. Pick one primary location (your project management tool, a dedicated feedback folder, or your feedback tool's dashboard) and route all input there. Scattered feedback across email, Slack, and various tools creates chaos regardless of individual tool quality.
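If your primary location is something you control, like a spreadsheet or a small script, the routing idea can be sketched as one shared record format that every source gets copied into. The `FeedbackItem` fields and the sample entries below are hypothetical. Real tools export different fields, so treat this as a shape to adapt rather than any product's actual data model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FeedbackItem:
    received: date
    source: str        # where it came from, e.g. "email", "Slack", "Figma"
    reviewer: str
    summary: str
    resolved: bool = False


def consolidate(*batches):
    """Merge feedback from several sources into one log, oldest first."""
    merged = [item for batch in batches for item in batch]
    return sorted(merged, key=lambda item: item.received)


def open_items(log):
    """Return only the feedback that still needs action."""
    return [item for item in log if not item.resolved]


# Hypothetical entries, for illustration only.
from_email = [FeedbackItem(date(2026, 1, 12), "email", "Client A", "Blue feels too dark")]
from_figma = [
    FeedbackItem(date(2026, 1, 10), "Figma", "Client B", "Button label is cut off", resolved=True),
    FeedbackItem(date(2026, 1, 14), "Figma", "Client A", "Swap the heading typeface"),
]

log = consolidate(from_email, from_figma)
for item in open_items(log):
    print(item.received, item.source, item.reviewer, item.summary)
```

The point is less the code than the habit: every piece of feedback ends up in one list with a date, a source, and a resolved flag, no matter which tool it arrived through.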

Conclusion

Forms scale, video captures nuance, and annotation shows location. The right feedback collection tool depends on what you're trying to learn, who you're asking, and how much feedback you'll receive.

For most teams dealing with client feedback on visual work, video collection fills a gap that forms and annotation leave open—the ability to hear exactly what someone means while seeing what they're looking at.

Test the fit. Most tools offer free tiers. Try your top candidate on a real project before committing, and pay attention to how respondents react to the experience, not just the feedback you collect.